Streaming video from GStreamer to Unreal Engine

07 Feb 2022

It is straightforward to display video content on 3D surfaces within a game in Unreal Engine by utilizing the built-in Media Framework. Supported video sources include static video files as well as a limited number of streaming formats. But what if the streaming video source that you want to display isn’t compatible with Unreal? One option is to use an external program that makes the video available in a format that Unreal can handle. In this post I demonstrate how to do this using the GStreamer multimedia framework.

Besides being a fun exercise in using Unreal Engine together with GStreamer, this may seem a little pointless at first glance. But I have something more advanced in sight in later posts, so please stay tuned.

Failed experiments

Before I dive into the “how to” part of this post, it’s worth mentioning the initial approaches that didn’t work. Although Unreal’s Media Framework documentation seems pretty thorough, it is actually vague on the details of which codecs and stream formats are supported, so I needed to do some experimentation. My hope was that I could get away with simply using gst-launch on the command line to quickly produce a stream that was acceptable to Unreal rather than having to write a program myself (laziness being a virtue), but that was not to be.

My first attempt involved simply producing an HLS stream. This works great in a basic static web page and also plays in VLC, but Unreal, sadly, is not able to render this stream despite the documentation listing HLS as a supported format. This is not meant as a critique of Unreal Engine, but as an illustration of how stated compatibility isn’t always what it seems. The devil is in the details.

Another attempt was to provide a UDP stream from GStreamer, with a hand-crafted SDP file to describe the stream, based on this approach. While this works in VLC, browsers were having none of it, so I was not surprised to find that Unreal couldn’t render this stream either.

The remainder of this post describes a “happy path” to a working solution disregarding various other missteps that may or may not have occurred along the way.

Components

For this project I’ll be building two components:

- A command-line streamer program that uses GStreamer to provide an RTSP stream of a video source

- An Unreal game that renders the video stream onto some objects in a 3D scene

Streamer program

The program will use gst-rtsp-server, which is a GStreamer library for building an RTSP server. One of the great things about GStreamer is that bindings are available for most popular programming languages, so you can pick your language of choice. Often if I am building a prototype I will write it either in Java (because it’s the language I am most experienced in) or Python (because it’s light on syntax), but for this task I decided to use my current most-intrigued-by language: Rust.

I was surprised by how little code is needed to build an RTSP server using gst-rtsp-server. The following is the complete Rust program based heavily on this example code:

```rust
use std::env;

use gstreamer_rtsp_server::prelude::*;

use anyhow::Error;
use derive_more::{Display, Error};

#[derive(Debug, Display, Error)]
#[display(fmt = "Could not get mount points")]
struct NoMountPoints;

#[derive(Debug, Display, Error)]
#[display(fmt = "Usage: {} LAUNCH_LINE", _0)]
struct UsageError(#[error(not(source))] String);

fn main() -> Result<(), Error> {
    gstreamer::init()?;

    // Expect exactly one argument: the pipeline in gst-launch syntax.
    let args: Vec<_> = env::args().collect();
    if args.len() != 2 {
        return Err(Error::from(UsageError(args[0].clone())));
    }

    let main_loop = glib::MainLoop::new(None, false);
    let server = gstreamer_rtsp_server::RTSPServer::new();
    let mounts = server.mount_points().ok_or(NoMountPoints)?;

    // The factory builds the media pipeline from the launch line.
    // Marking it as shared lets all clients reuse the same pipeline.
    let factory = gstreamer_rtsp_server::RTSPMediaFactory::new();
    factory.set_launch(args[1].as_str());
    factory.set_shared(true);

    // Serve the stream under the /test path.
    mounts.add_factory("/test", &factory);

    // Attach the server to the default main context.
    let _id = server.attach(None)?;

    println!(
        "Stream ready at rtsp://127.0.0.1:{}/test",
        server.bound_port()
    );

    main_loop.run();

    Ok(())
}
```

The complete code project is available on GitHub.

The resulting program is very flexible. The program itself only configures the RTSP server, with gst-rtsp-server doing all the heavy lifting, and lets you specify the rest of the GStreamer pipeline on the command line using gst-launch syntax. This makes it very easy to experiment with different video sources, codecs, transformations, etc. Here is an example command line that provides an RTSP stream showing a bouncing ball, where the foreground and background colours are swapped every second:

```
gst-rtsp-rs "( videotestsrc pattern=ball flip=true ! videoconvert ! openh264enc ! rtph264pay name=pay0 pt=96 )"
```

It turned out to be important to use openh264enc above instead of x264enc, because Unreal cannot understand the stream provided by the latter.
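Since only the source and the encoder change between experiments, the launch line can also be assembled programmatically. The following is a minimal sketch of that idea, not part of the original project: the `launch_line` helper is a hypothetical name, and it assumes the `videoconvert` and `rtph264pay name=pay0 pt=96` stages stay fixed as in the command above.

```rust
/// Hypothetical helper: wraps a source and an H.264 encoder in the fixed
/// parts of the pipeline used in this post (videoconvert plus RTP payloader).
fn launch_line(source: &str, encoder: &str) -> String {
    format!(
        "( {} ! videoconvert ! {} ! rtph264pay name=pay0 pt=96 )",
        source, encoder
    )
}

fn main() {
    // Same pipeline as the bouncing ball example.
    let ball = launch_line("videotestsrc pattern=ball flip=true", "openh264enc");
    println!("{}", ball);
}
```

The resulting string is what would be passed as the single LAUNCH_LINE argument to the streamer program.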

To view the stream produced by the program above, the following gst-launch command can be used. It simply connects to the RTSP stream and displays it in a new window. You could also open the stream address (rtsp://127.0.0.1:8554/test) in e.g. VLC. I’ll get to Unreal in the next section.

```
gst-launch-1.0 rtspsrc location=rtsp://127.0.0.1:8554/test latency=100 ! queue ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink
```

The stream looks something like this:
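A further check that needs no video window at all is to send the server a bare RTSP OPTIONS request and look at the status line it returns. The sketch below uses only the Rust standard library and assumes the server is listening on 127.0.0.1:8554 with the /test mount point, as in the examples above. The function names (`options_request`, `parse_status`, `probe`) are my own and not part of any library.

```rust
use std::io::{BufRead, BufReader, Write};
use std::net::{SocketAddr, TcpStream};
use std::time::Duration;

/// Formats a minimal RTSP OPTIONS request for the given URL.
fn options_request(url: &str, cseq: u32) -> String {
    format!("OPTIONS {} RTSP/1.0\r\nCSeq: {}\r\n\r\n", url, cseq)
}

/// Extracts the status code from a status line such as "RTSP/1.0 200 OK".
fn parse_status(line: &str) -> Option<u16> {
    let mut parts = line.split_whitespace();
    let version = parts.next()?;
    if !version.starts_with("RTSP/") {
        return None;
    }
    parts.next()?.parse().ok()
}

/// Connects, sends OPTIONS, and returns the status code of the reply, if any.
fn probe(addr: SocketAddr, url: &str) -> std::io::Result<Option<u16>> {
    let timeout = Duration::from_secs(2);
    let mut stream = TcpStream::connect_timeout(&addr, timeout)?;
    stream.set_read_timeout(Some(timeout))?;
    stream.write_all(options_request(url, 1).as_bytes())?;

    // Only the first line of the response is needed for this check.
    let mut status_line = String::new();
    BufReader::new(stream).read_line(&mut status_line)?;
    Ok(parse_status(&status_line))
}

fn main() {
    // Assumed address: the port used by the examples in this post.
    let addr: SocketAddr = "127.0.0.1:8554".parse().expect("valid socket address");
    match probe(addr, "rtsp://127.0.0.1:8554/test") {
        Ok(Some(code)) => println!("Server replied with RTSP status {}", code),
        Ok(None) => println!("Got a reply, but it did not look like RTSP"),
        Err(err) => println!("Could not reach the server: {}", err),
    }
}
```

This only confirms that something is speaking RTSP on that port. It says nothing about whether a given client, Unreal included, can decode the stream.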

Unreal game

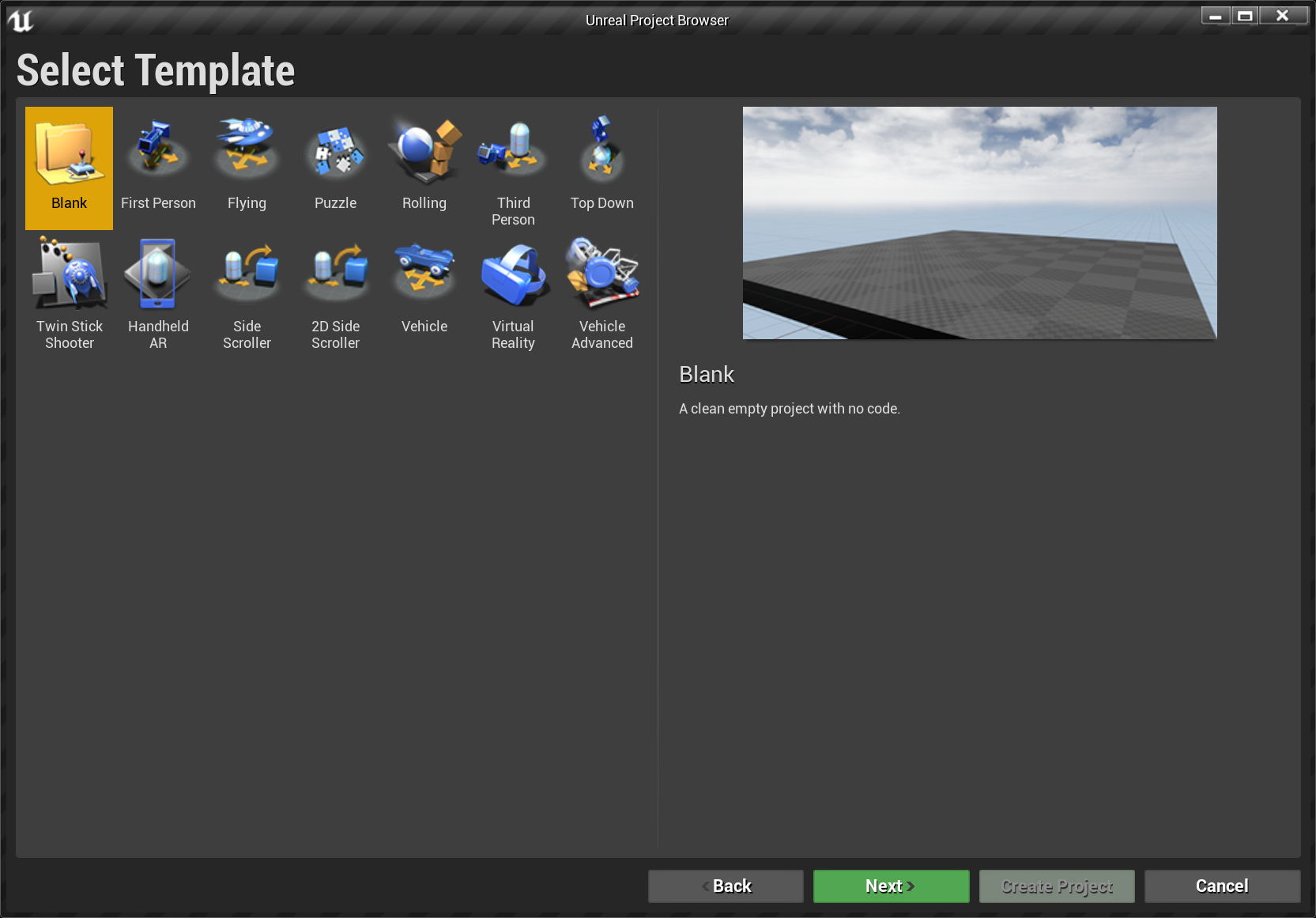

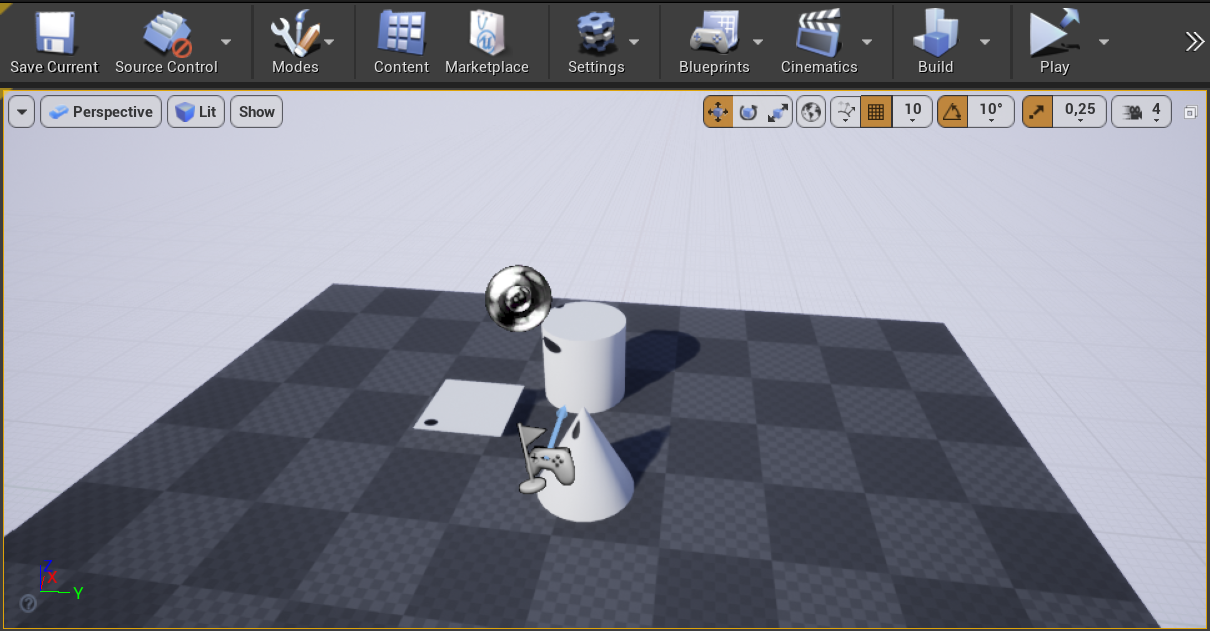

I began with a new Unreal project based on the blank template:

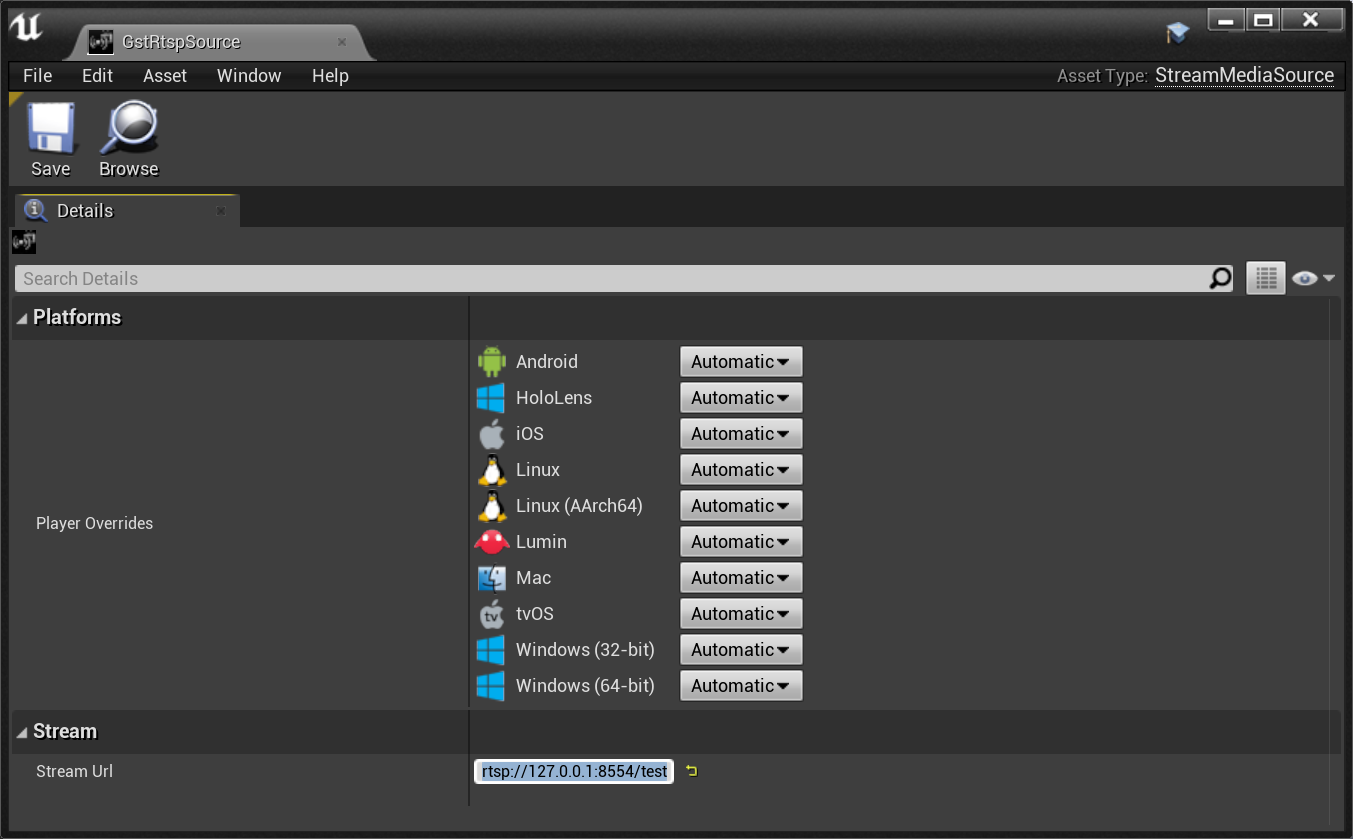

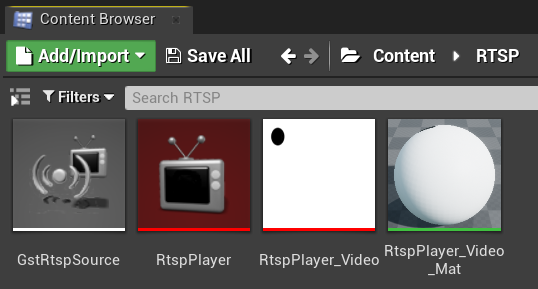

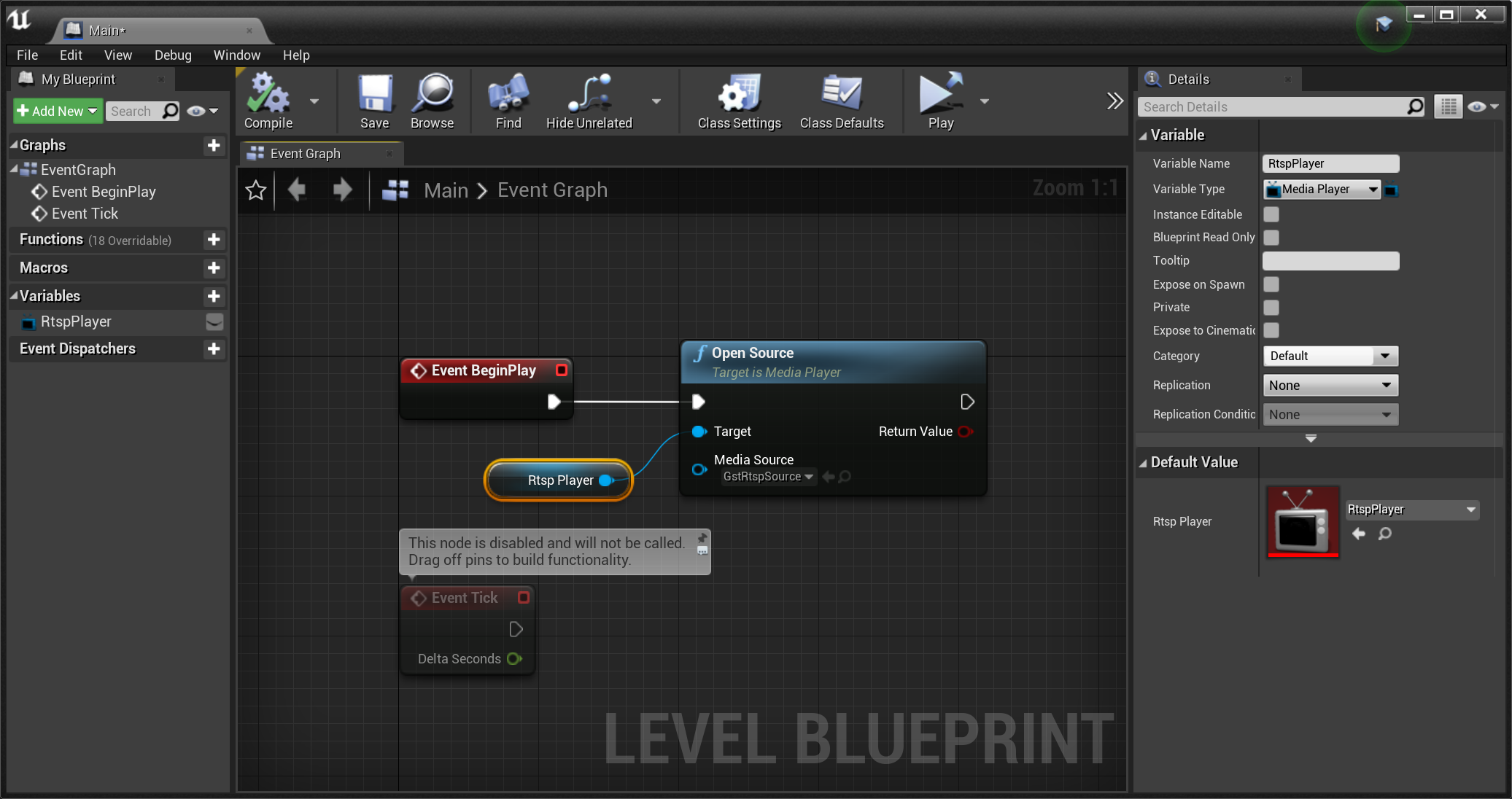

I created a Streaming Media Source (which points to the RTSP URL), a Media Player, an associated Media Texture, and a Material:

I added a plane and a couple of 3D objects to the scene and applied the video player’s material to them.

Finally I added a BeginPlay event handler to the level blueprint to start the video when the game starts:

The completed project will receive video from the local streaming source and render it in game. Here is a sample recording of the game with the GStreamer RTSP server streaming the animated ball video:

Live video source

Finally, let’s make this a little more interesting by using a live video source in the GStreamer pipeline. I’ll use my webcam as the input, so that I can see myself in-game. I don’t have to change much in the GStreamer pipeline to make this happen. It’s enough to replace the videotestsrc with ksvideosrc:

```
gst-rtsp-rs "( ksvideosrc ! videoconvert ! openh264enc ! rtph264pay name=pay0 pt=96 )"
```

It’s not very interesting to watch me moving my head around while trying to navigate in the game, but nonetheless here is a short recording of the result:
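Note that ksvideosrc is a Windows-specific element. As a rough sketch of how the same launch line might be adapted for other platforms, the hypothetical helper below picks a camera source at compile time. The elements named for Linux and macOS (v4l2src, avfvideosrc) and the autovideosrc fallback exist in GStreamer, but I have only tried ksvideosrc with Unreal, so treat the other branches as assumptions.

```rust
/// Hypothetical helper: chooses a GStreamer camera source element
/// for the platform this program is compiled on.
fn webcam_source() -> &'static str {
    if cfg!(target_os = "windows") {
        "ksvideosrc"
    } else if cfg!(target_os = "linux") {
        "v4l2src"
    } else if cfg!(target_os = "macos") {
        "avfvideosrc"
    } else {
        // Let GStreamer pick a source on other platforms.
        "autovideosrc"
    }
}

/// Builds the webcam launch line with the same fixed stages as above.
fn webcam_launch_line() -> String {
    format!(
        "( {} ! videoconvert ! openh264enc ! rtph264pay name=pay0 pt=96 )",
        webcam_source()
    )
}

fn main() {
    println!("{}", webcam_launch_line());
}
```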

Congratulations on making it this far! If you’re the first person to read this and let me know about it, I’ll bring you cake the next time we meet.