GStreamer pipeline running inside Unreal Engine

30 Mar 2022

Last time I used GStreamer to produce a video stream that can be consumed in an Unreal project. I found that for this to work it was necessary to produce an RTSP stream, which is a little more complicated than I would have liked. In the meantime I stumbled upon the nifty UE4 GStreamer plugin, which lets you execute a GStreamer pipeline within Unreal Engine itself and render the output directly to a Static Mesh, i.e. to any desired surface.

Using this plugin gives a lot more flexibility in how to stream video into Unreal, because you are no longer restricted to Unreal’s built-in streaming formats. I’ll make good use of this in the next post, but for now here’s a brief example of using the plugin with a static pipeline.

All credit for this plugin goes to its author, who also has a bunch of other interesting projects on GitHub. Go check them out!

Prerequisites

To use the plugin all you need is a working GStreamer installation (I’m using version 1.20.1) and Unreal Engine 4.26. After cloning the repository you need to make a couple of minor adjustments to the source code so that the plugin can find GStreamer (this is clearly explained in the repository linked above), and then you’re ready to play.

Using the plugin

The repository includes sample AppSink and AppSrc Actor blueprints. When configured with a GStreamer video pipeline, the AppSink renders the output of that pipeline to the surfaces of the Actor. By default it renders to both sides of a plane. The AppSrc uses Unreal’s built-in Scene Capture component to capture a rendering from within the Unreal game and use it as input to a GStreamer pipeline. Combining the two, as is done in the example project, allows you to render the captured Unreal scene somewhere else within that very scene - very cool! Below I’ll only illustrate the AppSink, just to show how easy it is to get started.

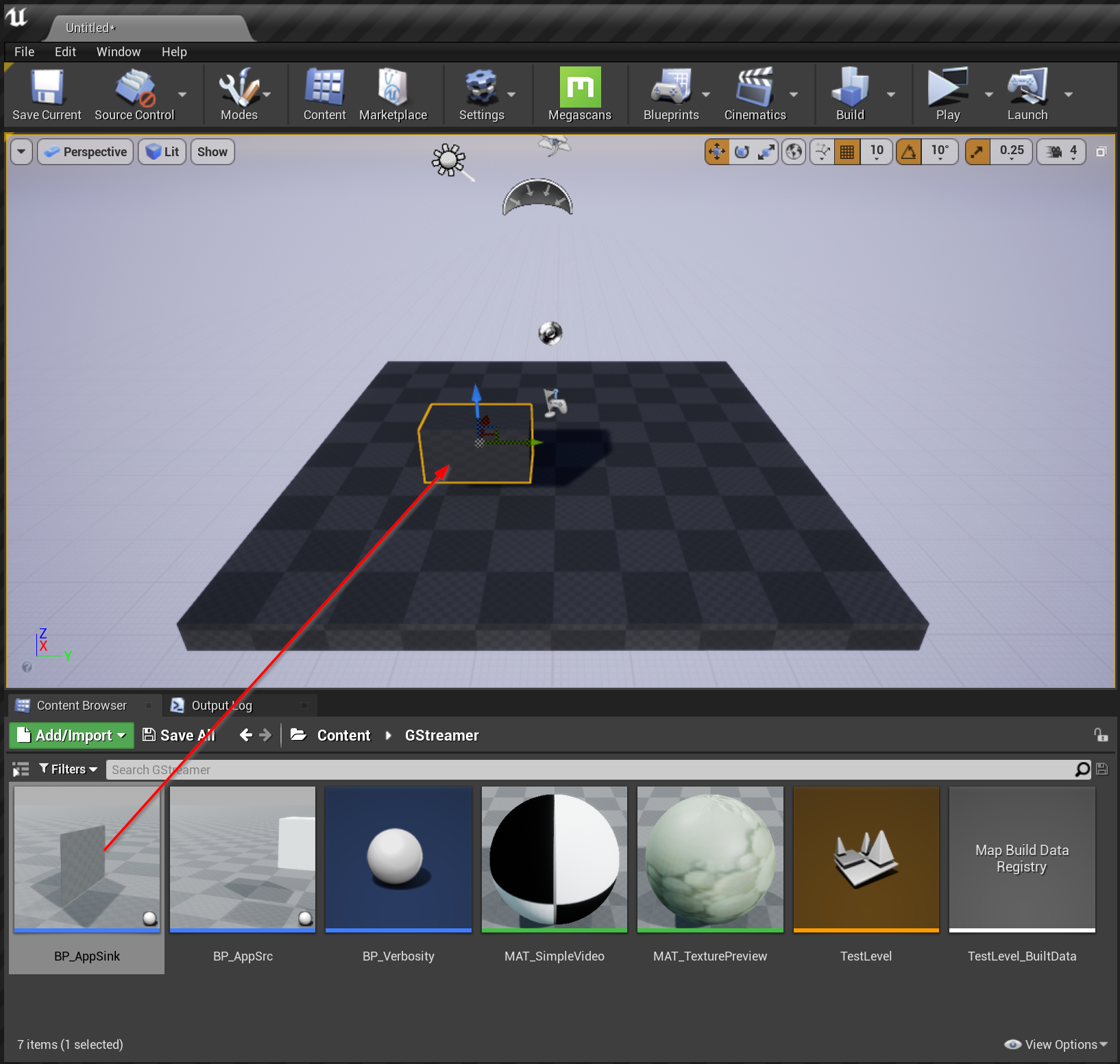

I began with an empty scene and simply dragged a BP_AppSink component into the scene and adjusted it from its default flat plane to a 3D cube:

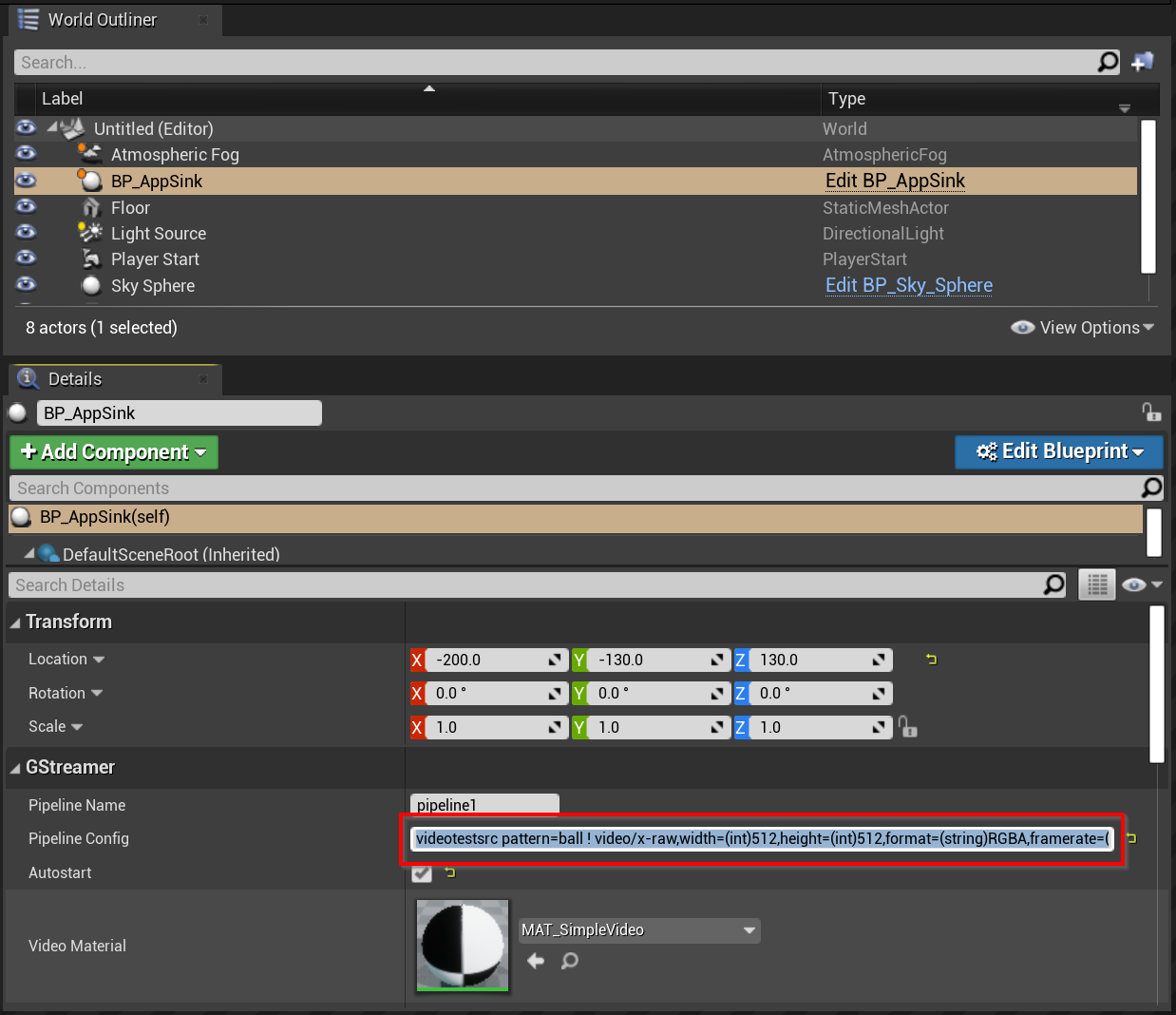

Then I configured the pipeline to use a videotestsrc that produces a video of a bouncing ball.

The full pipeline is quite simple: it configures the video test source, specifies which video format to use, and passes the output to the appsink:

videotestsrc pattern=ball ! video/x-raw,width=(int)512,height=(int)512,format=(string)RGBA,framerate=(fraction)30/1 ! appsink name=sink
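
If you want to experiment with other resolutions or test patterns, it can help to generate the pipeline string rather than edit it by hand. The following is only a minimal sketch in Python; the helper names `build_appsink_pipeline` and `preview_argv` are hypothetical and not part of the plugin. The second helper assumes that `gst-launch-1.0` is on your PATH and swaps the appsink for `videoconvert ! autovideosink`, so the same source and caps can be previewed in a desktop window outside Unreal.

```python
def build_appsink_pipeline(width=512, height=512, fps=30, pattern="ball",
                           pixel_format="RGBA", sink_name="sink"):
    """Return a pipeline description like the one used in this post.

    Hypothetical helper: the defaults reproduce the pipeline shown above.
    """
    # Caps filter: raw video with explicit size, pixel format and frame rate.
    caps = (
        f"video/x-raw,width=(int){width},height=(int){height},"
        f"format=(string){pixel_format},framerate=(fraction){fps}/1"
    )
    return f"videotestsrc pattern={pattern} ! {caps} ! appsink name={sink_name}"


def preview_argv(description):
    """Build an argument list for previewing the pipeline outside Unreal.

    Assumption: gst-launch-1.0 is installed. The final element (the appsink)
    is dropped and replaced by videoconvert ! autovideosink.
    """
    head, _, _ = description.rpartition(" ! ")
    return ["gst-launch-1.0", *head.split(), "!", "videoconvert", "!", "autovideosink"]


if __name__ == "__main__":
    pipeline = build_appsink_pipeline()
    print(pipeline)                       # paste this into the AppSink's pipeline setting
    print(" ".join(preview_argv(pipeline)))
```

Passing the result of `preview_argv` to `subprocess.run` as a list avoids shell quoting problems with the parentheses in the caps string.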

Here’s a short video clip of the project running: